Metal, Core Image, UIKit, Then Progressive Blur


tl;dr: Explore a workaround for applying a progressive blur to SwiftUI content hosted in UIKit views. This post details an architecture that combines Metal for generating precise blur masks using Signed Distance Fields (SDFs), Core Image for efficient kernel execution, and Core Animation for applying the variable blur effect, overcoming limitations of direct SwiftUI Metal integration.

Overview

Apple added Metal shader effects to SwiftUI, but they don't apply to UIKit-backed views such as ScrollView, which makes it hard to render a progressive blur directly over scrolling content.

This post introduces a workaround inspired by a WWDC20 session, Build Metal-based Core Image kernels with Xcode.

It uses Core Image kernels, written in Metal, to produce the progressive blur.

The Metal code does not blur the content directly; it only creates a mask for the CAFilter blur.

```
┌────────────────────┐
│  SwiftUI Content   │
└─────────┬──────────┘
          │ apply modifier
          ▼
┌────────────────────┐
│  SwiftUI ⇄ UIKit   │
│    bridge layer    │
└─────────┬──────────┘
          │ hosts
          ▼
┌────────────────────┐
│  UIKit Blur View   │
└─────────┬──────────┘
          │ needs mask
          ▼
┌────────────────────┐
│ CI Kernel + Metal  │
│   (mask builder)   │
└─────────┬──────────┘
          │ grayscale mask
          ▼
┌────────────────────┐
│   CAFilter Blur    │
│(progressive radius)│
└─────────┬──────────┘
          │ composited
          ▼
┌────────────────────┐
│   Final Blurred    │
│      Overlay       │
└────────────────────┘
```

Metal

We ask Metal to draw a rectangle (like a CGRect). The shader first uses an SDF to compute each pixel's signed distance from the rectangle's edges, then converts those distances into the grayscale mask that the CAFilter blur uses.

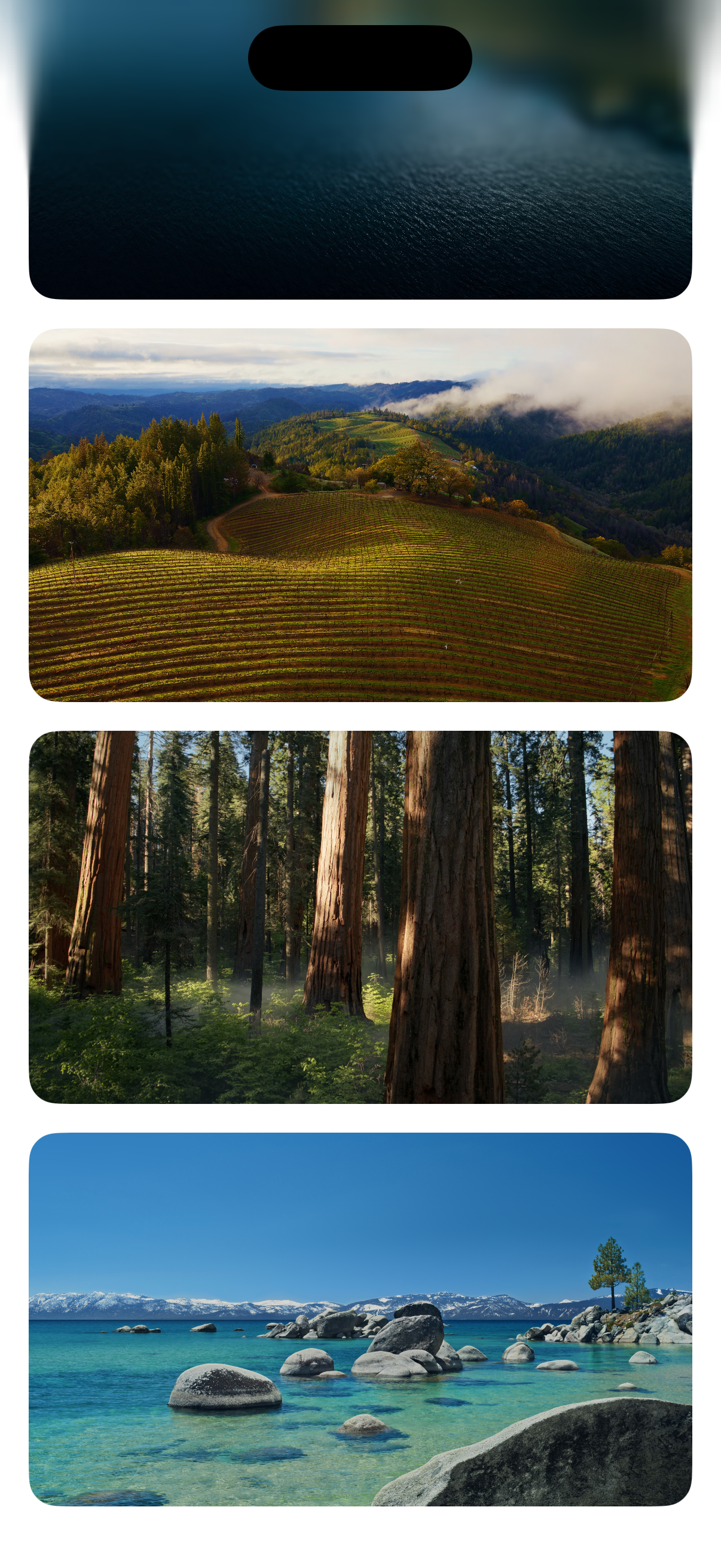

Here's what the generated mask looks like:

```
┌────────────────┐
│░░░░░░░░░░░░░░░░│
│░░░░░░░░░░░░░░░░│
│▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒│
│▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓│
│████████████████│
└────────────────┘
```
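The row pattern above is what a "linear" (top-to-bottom) mask produces: every pixel in a row shares one alpha value. As a rough CPU reference, here is a hypothetical sketch (the names `linearMask` and `shade` and the `fadeStart`/`fadeEnd` parameters are ours, not the post's) that samples one alpha per pixel row with a linear ramp and maps it to the same shading characters, darker meaning higher alpha:

```swift
/// Hypothetical CPU reference for a "linear" mask: one alpha value per pixel row,
/// 0 above `fadeStart`, 1 below `fadeEnd`, and a linear ramp in between.
/// `fadeStart` and `fadeEnd` are fractions of the view height (assumed parameters).
func linearMask(heightPx: Int, fadeStart: Double, fadeEnd: Double) -> [Double] {
    precondition(heightPx > 0 && fadeEnd > fadeStart, "invalid mask parameters")
    return (0..<heightPx).map { row in
        // Sample at the pixel center, normalized to [0, 1] from top to bottom
        let y = (Double(row) + 0.5) / Double(heightPx)
        let t = (y - fadeStart) / (fadeEnd - fadeStart)
        return min(max(t, 0), 1)
    }
}

/// Maps alpha to the diagram's shading characters (light = low alpha).
func shade(_ alpha: Double) -> Character {
    let ramp: [Character] = ["░", "▒", "▓", "█"]
    let index = min(Int(alpha * Double(ramp.count)), ramp.count - 1)
    return ramp[index]
}

// Five rows, 16 characters wide, like the diagram
let rows = linearMask(heightPx: 5, fadeStart: 0.3, fadeEnd: 1.0)
for alpha in rows {
    print(String(repeating: shade(alpha), count: 16))
}
```

With these parameters the five rows come out as ░, ░, ▒, ▓, █, matching the diagram.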

Signed Distance Fields (SDF)

In mathematics and its applications, the signed distance function or signed distance field (SDF) is the orthogonal distance of a given point x to the boundary of a set Ω in a metric space (such as the surface of a geometric shape), with the sign determined by whether or not x is in the interior of Ω.

The function has positive values at points x inside Ω, it decreases in value as x approaches the boundary of Ω where the signed distance function is zero, and it takes negative values outside of Ω.

However, the alternative convention (negative inside Ω and positive outside) is also common, and it is the one the shaders in this post use. The concept also sometimes goes by the name oriented distance function/field.
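Written out with the negative-inside convention, which is the one the rounded-rectangle shader below follows:

$$
\operatorname{sdf}_\Omega(x) =
\begin{cases}
-\operatorname{dist}(x, \partial\Omega), & x \in \Omega \\
\phantom{-}\operatorname{dist}(x, \partial\Omega), & x \notin \Omega
\end{cases}
\qquad
\operatorname{dist}(x, \partial\Omega) = \inf_{y \in \partial\Omega} \lVert x - y \rVert
$$

For a circle of radius $r$ centered at $c$, this reduces to $\operatorname{sdf}(x) = \lVert x - c \rVert - r$: negative inside, zero on the circle, positive outside.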

SDFs give the signed distance from any point to a shape's boundary. For a rounded rectangle centered at the origin, it has a closed form:

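```metal
#include <metal_stdlib>
using namespace metal;

// Signed distance from p (relative to the rectangle's center) to a rounded
// rectangle with the given half-extents and corner radius.
// Negative inside, zero on the boundary, positive outside.
float rounded_rect_sdf(float2 p, float2 half_size, float radius) {
    // Keep the corner radius within the shorter half-extent
    float r = clamp(radius, 0.0f, min(half_size.x, half_size.y));
    // Fold p into the first quadrant and measure against the box shrunk by r
    float2 d = abs(p) - (half_size - float2(r));
    // Euclidean distance to the shrunken box when outside it
    float outside = length(max(d, float2(0.0f)));
    // Negative distance to the nearest edge when inside it, else 0
    float inside = min(max(d.x, d.y), 0.0f);
    // Re-inflate by r, which rounds the corners
    return outside + inside - r;
}
```

Visualization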

```
   Outside (dist > 0)
          ▲
          │ +2
          │ +1
──────────┼────────── Shape Boundary (dist = 0)
          │ -1
          │ -2
          ▼
   Inside (dist < 0)
```

Why do we use SDFs here?

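- Smooth, anti-aliased edges at any scale, because alpha comes from a continuous distance rather than rasterized geometry

- Resolution-independent: the mask is re-evaluated per pixel from a few shape parameters, so it stays sharp at any screen scale

- Exact: the distance has a closed form, with no approximation from sampled geometry

- GPU-friendly: each pixel is computed independently with a handful of arithmetic operations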
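Because the SDF is plain arithmetic, it is easy to check off the GPU. Below is a minimal Swift port of `rounded_rect_sdf`, using the standard library's `SIMD2<Float>` in place of Metal's `float2`. The name `roundedRectSDF` is ours, and this is a testing aid rather than part of the post's pipeline:

```swift
/// CPU port of the `rounded_rect_sdf` shader, for quick checks outside Metal.
/// `p` is relative to the rectangle's center. Negative inside, 0 on the edge, positive outside.
func roundedRectSDF(_ p: SIMD2<Float>, halfSize: SIMD2<Float>, radius: Float) -> Float {
    // Keep the corner radius within the shorter half-extent
    let r = min(max(radius, 0), min(halfSize.x, halfSize.y))
    // Fold p into the first quadrant and measure against the box shrunk by r
    let d = SIMD2(abs(p.x), abs(p.y)) - (halfSize - SIMD2(repeating: r))
    // Euclidean distance to the shrunken box when outside it
    let q = SIMD2(max(d.x, 0), max(d.y, 0))
    let outside = (q.x * q.x + q.y * q.y).squareRoot()
    // Negative distance to the nearest edge when inside it, else 0
    let inside = min(max(d.x, d.y), 0)
    // Re-inflate by r, which rounds the corners
    return outside + inside - r
}

let half = SIMD2<Float>(50, 25)  // a 100 × 50 rectangle
print(roundedRectSDF(SIMD2(0, 0), halfSize: half, radius: 0))    // -25.0 (center)
print(roundedRectSDF(SIMD2(60, 0), halfSize: half, radius: 0))   // 10.0 (right of the edge)
print(roundedRectSDF(SIMD2(50, 25), halfSize: half, radius: 10)) // bounding-box corner, outside the rounded corner
```

Doubling both the rectangle and the sample point doubles the distance, which is the resolution independence noted above: at a new screen scale the mask is simply re-evaluated at the new pixel size.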

Distance-to-Alpha Conversion

Convert distance to smooth opacity using Hermite smoothstep:

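```metal
#include <metal_stdlib>
using namespace metal;

// Maps a signed distance to mask alpha: 0 at dist <= -fade_width (deep inside),
// rising smoothly to 1 at the boundary (dist = 0) and staying 1 outside.
float distance_to_alpha(float dist, float fade_width) {
    if (fade_width <= 0.0f) {
        return (dist >= 0.0f) ? 1.0f : 0.0f; // Hard edge
    }
    // Normalize: dist in [-fade_width, 0] maps to t in [0, 1], clamped outside that band
    float t = clamp(1.0f + dist / fade_width, 0.0f, 1.0f);
    // Hermite smoothstep: 3t² - 2t³
    return t * t * (3.0f - 2.0f * t);
}
```

Visualization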

```
Alpha
1.0 ┤        ╭────────
    │      ╭─╯
0.5 │    ╭─╯
    │  ╭─╯
0.0 └──╯─────────────> Distance
```

Mathematical Properties:

- Smooth start (zero derivative at t=0)

- Smooth end (zero derivative at t=1)

- No hard step at the edge, so no stair-step aliasing

- A visually smooth, gradual fade
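The same conversion is easy to verify on the CPU. This is a minimal Swift port of `distance_to_alpha` (the name `distanceToAlpha` is ours; it is a checking aid, not the post's code):

```swift
/// CPU port of the `distance_to_alpha` shader, for checks outside Metal.
/// Alpha is 0 at `dist <= -fadeWidth` (deep inside), 1 at the boundary and outside.
func distanceToAlpha(_ dist: Float, fadeWidth: Float) -> Float {
    // Hard edge when there is no fade band
    guard fadeWidth > 0 else { return dist >= 0 ? 1 : 0 }
    // Map dist in [-fadeWidth, 0] to t in [0, 1], clamping beyond that band
    let t = min(max(1 + dist / fadeWidth, 0), 1)
    // Hermite smoothstep: 3t² - 2t³
    return t * t * (3 - 2 * t)
}

// Walk across a 10-unit fade band, from deep inside to outside the shape
for dist: Float in [-12, -10, -7.5, -5, -2.5, 0, 4] {
    print(dist, distanceToAlpha(dist, fadeWidth: 10))
}
```

Alpha passes through 0.5 in the middle of the band, and the two halves mirror each other (alpha at -2.5 and at -7.5 sum to 1), which is the symmetry of smoothstep.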

Easing Functions

Hermite Smoothstep

```
Alpha
1.0 ┤        ╭────
    │      ╭─╯
0.5 │    ╭─╯
    │  ╭─╯
0.0 └──╯────────────> Position
```

- Smooth acceleration and deceleration

- C¹ continuous (continuous first derivative)

- Well suited to anti-aliased edges
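The endpoint behavior of the smoothstep curve follows from its derivatives on $[0, 1]$:

$$
S(t) = 3t^2 - 2t^3, \qquad S'(t) = 6t - 6t^2 = 6t(1 - t), \qquad S''(t) = 6 - 12t
$$

$$
S(0) = 0, \quad S(1) = 1, \quad S'(0) = S'(1) = 0, \quad S''(0) = 6, \quad S''(1) = -6
$$

Because $S'$ vanishes at both ends, the clamped curve meets the flat regions outside $[0, 1]$ with matching slope, which is what C¹ means here. $S''$ jumps from 0 to 6 at $t = 0$ and from -6 to 0 at $t = 1$, so the clamped curve is not C².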

Quadratic Ease-In

```
Alpha
1.0 ┤          ╭─
    │        ╭─╯
0.5 │     ╭──╯
    │  ╭──╯
0.0 └──╯─────────────> Position
```

- Starts slowly and keeps accelerating (f(t) = t²)

- More dramatic transition, arriving at 1 with a nonzero slope

- Good for emphasis effects
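To compare the two curves numerically, here is a small hypothetical helper (the `Easing` type and `value(at:)` are ours, not part of the post's code) that evaluates both on a clamped t in [0, 1]:

```swift
/// Hypothetical helper for the two easing curves above, evaluated on t in [0, 1].
enum Easing {
    case smoothstep       // 3t² - 2t³: zero slope at both ends
    case quadraticEaseIn  // t²: zero slope at t = 0, slope 2 at t = 1

    func value(at t: Double) -> Double {
        let x = min(max(t, 0), 1)  // clamp the input to [0, 1]
        switch self {
        case .smoothstep: return x * x * (3 - 2 * x)
        case .quadraticEaseIn: return x * x
        }
    }
}

// Sample both curves at a few positions to compare their shapes
for t in stride(from: 0.0, through: 1.0, by: 0.25) {
    print(t, Easing.smoothstep.value(at: t), Easing.quadraticEaseIn.value(at: t))
}
```

At t = 0.5 smoothstep is already at 0.5 while the ease-in curve is only at 0.25, and the ease-in curve reaches 1 with slope 2 instead of 0, which is why its transition reads as more abrupt.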

Core Image

We rely on Core Image to load and run our Metal-based mask shaders, then to render those masks into CGImages for the progressive blur pipeline.

CIKernelCache loads our precompiled metallib, constructs CIColorKernels from it, and keeps a shared CIContext for rendering.

Each concrete cache exposes specific kernels by name, all created through CIColorKernel(functionName:fromMetalLibraryData:) so they can be reused without recompiling.
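The build-once, reuse-by-name idea can be sketched independently of Core Image. In this hypothetical `KernelCache`, each kernel is created on first request and then served from a dictionary. On Apple platforms the `make` closure would wrap `try CIColorKernel(functionName:fromMetalLibraryData:)` with the loaded metallib data; here it is a plain closure so the logic runs anywhere. The type, its method, and the kernel names are illustrative, not the post's API:

```swift
import Foundation

/// Hypothetical name-keyed cache: builds each kernel once, then reuses it.
final class KernelCache<Kernel> {
    private let make: (String) throws -> Kernel
    private var kernels: [String: Kernel] = [:]
    private let lock = NSLock()  // guards the dictionary if kernels are requested from several threads

    init(make: @escaping (String) throws -> Kernel) {
        self.make = make
    }

    func kernel(named name: String) throws -> Kernel {
        lock.lock()
        defer { lock.unlock() }
        if let cached = kernels[name] { return cached }
        let kernel = try make(name)  // only runs on the first request for this name
        kernels[name] = kernel
        return kernel
    }
}

// Stand-in for a real kernel object, so identity can be checked
final class FakeKernel {
    let name: String
    init(name: String) { self.name = name }
}

let cache = KernelCache<FakeKernel> { FakeKernel(name: $0) }
let first = try! cache.kernel(named: "linearMask")
let again = try! cache.kernel(named: "linearMask")
print(first === again)  // true: the second call hits the cache
```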

When the blur view needs a mask, PBUIView.generateMaskImage chooses the right kernel and asks CIKernelCache.generateCGImage to run it; Core Image executes the kernel with the provided arguments and returns a CGImage.

That CGImage is then fed into the private CAFilter as the mask for the variable blur effect.
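Steps 2 and 3 of the pipeline (the regeneration check and the point-to-pixel conversion) need nothing from Core Image. A hypothetical sketch follows; the `MaskSignature` fields, the `fadeWidth` parameter, the `MaskState` type, and round-to-nearest pixel sizing are all assumptions, since the post does not show the real types:

```swift
/// Hypothetical mask shapes, mirroring the MaskType cases named in step 4.
enum MaskType: Equatable {
    case linear, rounded, squircle
}

/// Hypothetical regeneration key: the mask is rebuilt only when this changes.
struct MaskSignature: Equatable {
    let widthPx: Int
    let heightPx: Int
    let scale: Double
    let maskType: MaskType
    let fadeWidth: Double  // example shape parameter (assumed)

    /// Converts a size in points to whole pixels: points × scale, rounded to nearest (assumed).
    init(width: Double, height: Double, scale: Double, maskType: MaskType, fadeWidth: Double) {
        widthPx = Int((width * scale).rounded())
        heightPx = Int((height * scale).rounded())
        self.scale = scale
        self.maskType = maskType
        self.fadeWidth = fadeWidth
    }
}

/// Remembers the last signature and reports when a new mask is needed.
final class MaskState {
    private(set) var current: MaskSignature?

    func needsRegeneration(for signature: MaskSignature) -> Bool {
        guard signature != current else { return false }
        current = signature
        return true
    }
}

let state = MaskState()
let base = MaskSignature(width: 390, height: 120, scale: 3, maskType: .linear, fadeWidth: 24)
print(base.widthPx, base.heightPx)         // 1170 360
print(state.needsRegeneration(for: base))  // true: first layout
print(state.needsRegeneration(for: base))  // false: nothing changed
```

Comparing a small value type on every `layoutSubviews()` is cheap, so the GPU work only happens when size, scale, or shape parameters actually change.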

```
┌─────────────────────────────────────────────────────────┐
│ 1. Layout Change Detected                               │
│    PBUIView.layoutSubviews()                            │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 2. Check if Mask Needs Regeneration                     │
│    Compare MaskSignature (size, scale, params)          │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 3. Calculate Pixel Dimensions                           │
│    widthPx = bounds.width × screen.scale                │
│    heightPx = bounds.height × screen.scale              │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 4. Select Metal Kernel                                  │
│    Based on MaskType (linear/rounded/squircle)          │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 5. Apply Metal Kernel                                   │
│    kernel.apply(extent: rect, arguments: [...])         │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 6. Generate CIImage                                     │
│    Kernel output is a lazy CIImage (grayscale mask)     │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 7. Render to CGImage (GPU runs the kernel)              │
│    context.createCGImage(ciImage, from: extent)         │
└────────────────┬────────────────────────────────────────┘
                 ▼
┌─────────────────────────────────────────────────────────┐
│ 8. Apply to CAFilter                                    │
│    filter.setValue(cgImage, forKey: "inputMaskImage")   │
└─────────────────────────────────────────────────────────┘
```

Core Animation

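In this architecture, we use Core Animation to reach the blur view's backdrop layer and attach the private CAFilter to it, looking up the class and selector at runtime through the Objective-C runtime. The private class, selector, and key names are stored Base64-encoded and decoded when needed: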

```swift
// `decode` Base64-decodes each private name at runtime.
static var filterClassName: String {
    decode("Q0FGaWx0ZXI=") // "CAFilter"
}

static var filterMethodName: String {
    decode("ZmlsdGVyV2l0aFR5cGU6") // "filterWithType:"
}

static var filterTypeName: String {
    decode("dmFyaWFibGVCbHVy") // "variableBlur"
}

static var radiusKey: String {
    decode("aW5wdXRSYWRpdXM=") // "inputRadius"
}

static var maskKey: String {
    decode("aW5wdXRNYXNrSW1hZ2U=") // "inputMaskImage"
}

static var normalizeKey: String {
    decode("aW5wdXROb3JtYWxpemVFZGdlcw==") // "inputNormalizeEdges" (padding restored)
}
```
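The `decode` helper itself is not shown in the post. Assuming it is plain Base64 (every string above decodes correctly as such), a hypothetical version might look like this. Foundation's `Data(base64Encoded:)` expects standard padded Base64, so the sketch restores any missing `=` padding first:

```swift
import Foundation

/// Hypothetical version of the `decode` helper: Base64 text to a UTF-8 string.
/// Restores missing "=" padding, since `Data(base64Encoded:)` expects padded input.
func decode(_ encoded: String) -> String {
    let padding = String(repeating: "=", count: (4 - encoded.count % 4) % 4)
    guard let data = Data(base64Encoded: encoded + padding),
          let text = String(data: data, encoding: .utf8) else {
        return ""  // malformed input: return an empty name rather than crash
    }
    return text
}

print(decode("dmFyaWFibGVCbHVy"))            // variableBlur
print(decode("aW5wdXROb3JtYWxpemVFZGdlcw"))  // inputNormalizeEdges (unpadded input)
```

Returning an empty string keeps the call sites non-optional; a production version might prefer to fail loudly so a typo in an encoded name is caught early.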

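```swift
import UIKit

// Assumption: PBUIView subclasses UIVisualEffectView; the method name is illustrative.
// The string literals are the decoded values of the names above.
extension PBUIView {
    func installVariableBlur(maxBlurRadius: CGFloat) {
        // 1. Get the CAFilter class through the Objective-C runtime
        guard let filterClass = NSClassFromString("CAFilter") as? NSObject.Type else { return }

        // 2. Create the variable blur filter instance
        guard let filter = filterClass.perform(
            NSSelectorFromString("filterWithType:"),
            with: "variableBlur"
        )?.takeUnretainedValue() as? NSObject else { return }

        // 3. Configure filter parameters (inputMaskImage is set once the mask CGImage is ready)
        filter.setValue(maxBlurRadius, forKey: "inputRadius")
        filter.setValue(true, forKey: "inputNormalizeEdges")

        // 4. Apply to the backdrop layer (the actual content)
        let backdropLayer = subviews.first?.layer
        backdropLayer?.filters = [filter]

        // 5. Hide the default tint overlay views
        for subview in subviews.dropFirst() {
            subview.alpha = 0
        }
    }
}
```

Preview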